Google VEO-3 Watermarking: The New Standard for AI Video Trust, Transparency, and Visibility

Google VEO-3 watermarking is more than a subtle disclosure label. It’s the backbone of how Google will verify, interpret, and surface AI-generated video across its ecosystem. Other coverage frames the watermark as “small,” “barely visible,” or “a transparency update,” but the impact goes far deeper.

VEO-3 watermarking is the first formal step in a system where trust signals will determine whether AI video is ranked, recommended, or restricted. The watermark is subtle by design, but its influence on visibility is not.

Here’s the strategic breakdown most teams overlook.

What Google VEO-3 Watermarking Actually Does

Google VEO-3 watermarking embeds a machine-readable authenticity stamp into AI-generated video. It verifies:

- the model used to generate the content

- the version and creation pathway

- the presence of compliant provenance data

- that the video is eligible for AI video classification

The watermark is “small” and intentionally unobtrusive. But subtle doesn’t mean insignificant. Google designed it to avoid interrupting the viewer experience while still giving its AI verification systems the signals they need to establish trust.

The watermark is not for humans — it’s for machines.

Why Google Chose a Subtle Watermark

BGR and Mashable highlight how understated the new watermark is. That’s intentional. If Google made the watermark obvious or intrusive, it would disrupt storytelling and harm adoption. But if it removed watermarking altogether, synthetic content could spread without accountability.

Google opted for the middle path: a watermark you won’t notice but AI systems will.

This gives Google flexibility to:

- label AI video responsibly

- maintain user experience

- enforce authenticity standards behind the scenes

- prepare for upcoming global regulations

The small size isn’t a flaw. It’s a strategy.

How Google Actually Uses the Watermark — Beyond Simple Transparency

Most people think watermarking exists only to signal transparency or tell viewers that a video was generated with AI. But inside Google’s ecosystem, the watermark plays a far more critical role. It acts as the first verification checkpoint in Google’s multimodal evaluation pipeline, determining whether the system can trust, classify, and surface the video accurately.

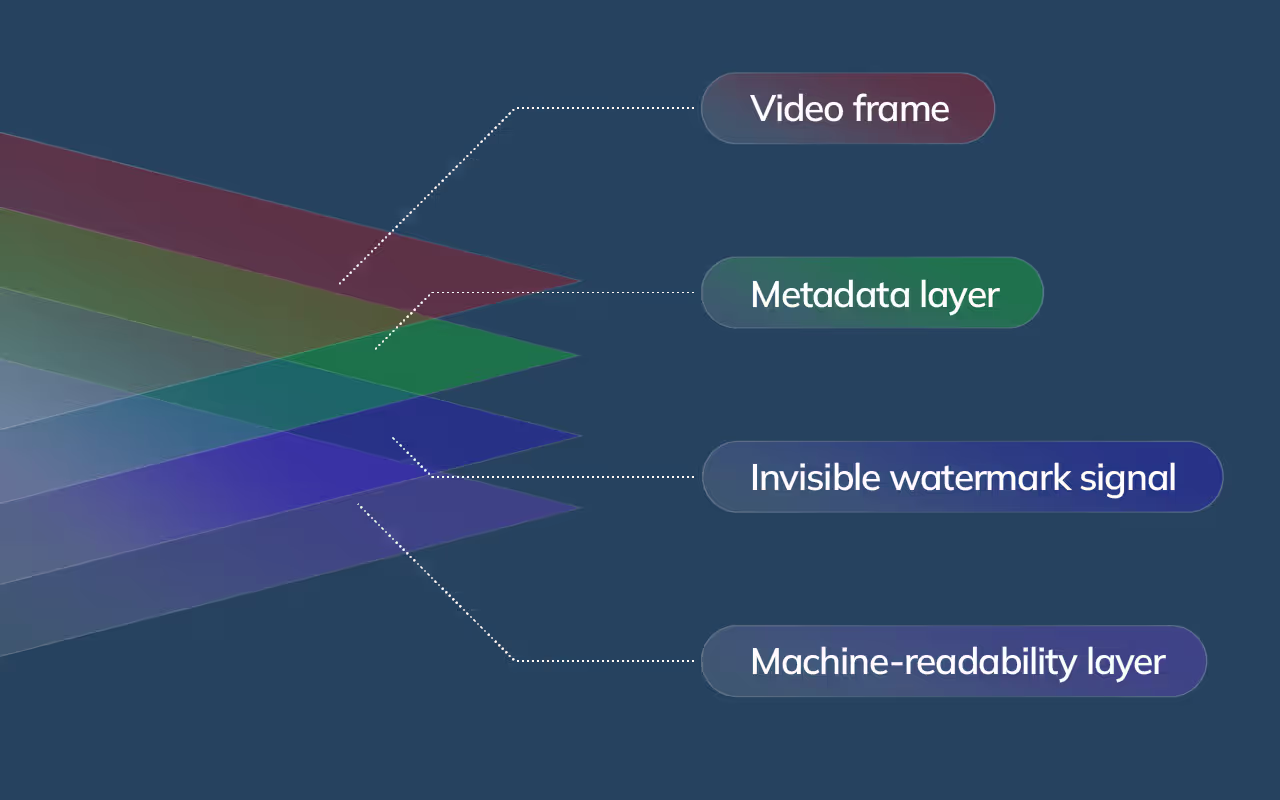

Once the watermark is detected and validated, Google begins deeper interpretation across multiple layers, including:

- scene and object detection

- entity relationships

- motion physics and continuity

- lighting logic and environment accuracy

- narrative and semantic meaning

- alignment between visuals and metadata

- accuracy of structured data and schema

These signals work together to help Google understand what the video is, how it was generated, and whether it can be safely recommended.

If a watermark is missing, corrupted, or inconsistent with the video’s metadata, Google treats the asset as unverified AI content, which can:

- reduce search and discovery visibility

- limit how often the video appears in recommendations

- exclude it from AI Overviews or certain product surfaces

- trigger disclosure or safety review mechanisms

This is why VEO-3 watermarking is not just an authenticity label. It is a gatekeeper signal that determines whether Google will fully index, classify, and surface the content.

Read more: Real marketing use cases for Google VEO-3.

Why Watermarking Alone Doesn’t Solve AI Video Integrity

Watermarking is often misunderstood as a complete solution for verifying AI-generated content, but Google never designed it to operate in isolation. The watermark is only one part of a broader multi-signal integrity framework that determines whether a video can be trusted, indexed, and distributed.

Google evaluates AI video using a layered approach that includes:

- provenance and creation metadata

- prompt lineage and model attribution

- structured data describing entities and scenes

- safety and policy classification

- content stability and visual consistency

- user-provided disclosures and contextual information

If a watermark is removed or altered, these additional signals still inform Google’s assessment. Integrity is not determined by a single indicator — it emerges from the alignment of all signals working together.

Because of this, brands producing AI video at scale now require dedicated oversight of:

- authenticity and provenance signals

- metadata engineering and optimization

- schema and structured data architecture

- compliance and disclosure alignment

- semantic consistency between visuals and metadata

- indexing readiness across Google’s surfaces

This integrated approach ensures the video is not only generated well but also interpreted correctly by Google’s systems.

Discover: Compare Google VEO-3 with Runway ML in this blog.

What This Means for Brands Using AI Video

Google’s adoption of VEO-3 watermarking sends a clear message:

AI video is welcome, but only if it is transparent, traceable, and machine-readable.

For brands, this means the production pipeline must evolve. Teams should now ensure:

✔ Valid watermarking on every asset

Even minor export changes or compression errors can weaken the trust signal.

✔ Metadata that reflects what the video truly shows

Semantic alignment prevents misclassification and ranking loss.

✔ Structured data that reinforces the video’s meaning

Schema helps Google interpret scenes with accuracy and stability.

✔ Correct and consistent synthetic media disclosures

Missing or inaccurate disclosure can trigger suppression.

✔ Provenance that matches the watermark and metadata

Google checks whether signals agree with each other, not just whether they exist.
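Google has not published how it cross-checks these signals, so the following is only an in-house analogue: a minimal Python sketch that compares the fields your own records share (for example a provenance log, the published metadata, and a disclosure form) and reports any field whose values disagree. The source names, field names, and values are hypothetical.

```python
def find_conflicts(sources: dict[str, dict[str, str]], fields: list[str]) -> dict[str, dict[str, str]]:
    """Report every field whose value differs between the sources that record it.

    `sources` maps a source name (e.g. "provenance_log") to the fields it records.
    A field missing from a source is skipped for that source, not treated as a conflict.
    """
    conflicts: dict[str, dict[str, str]] = {}
    for name in fields:
        values = {src: record[name] for src, record in sources.items() if name in record}
        if len(set(values.values())) > 1:
            conflicts[name] = values
    return conflicts


# Hypothetical records for one asset; field names and values are illustrative only.
asset_sources = {
    "provenance_log": {"video_id": "launch-01", "model": "veo-3", "ai_generated": "yes"},
    "published_metadata": {"video_id": "launch-01", "model": "veo-3", "ai_generated": "no"},
    "disclosure_form": {"video_id": "launch-01", "ai_generated": "yes"},
}
print(find_conflicts(asset_sources, ["video_id", "model", "ai_generated"]))
# {'ai_generated': {'provenance_log': 'yes', 'published_metadata': 'no', 'disclosure_form': 'yes'}}
```

Treating a missing field as "no opinion" rather than a conflict keeps the check focused on outright contradictions, which are the cases this section warns about.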

The brands accelerating fastest with AI video are the ones approaching it as a governed process, not just a creative exercise. Prompting generates the footage — governance ensures it performs.

How VEO-3 Watermarking Affects Discoverability

Many assume watermarking is solely about transparency for viewers, but its impact extends directly into search and recommendation systems. Google prioritizes content it can verify, because verified content reduces the risk of misinformation and improves classification accuracy.

Watermarked videos benefit from:

- stronger authenticity and trust signals

- fewer indexing or categorization errors

- reduced risk of restriction or downgrading

- higher eligibility for AI Overviews and enriched results

- more stable performance across Google products over time

Conversely, videos without valid watermarking — or with signals that conflict with their metadata — may experience:

- lower ranking

- incorrect topic assignment

- exclusion from recommendation feeds

- additional safety review or limited distribution

Watermarking directly influences visibility, even though users never see it.

Trust signals shape performance.

Explore more: How a Google VEO-3 Editor improves trust & visibility.


How Founders Can Stay Ahead of VEO-3 Visibility

For startups moving fast with Google VEO-3, the biggest shift is recognizing that AI video is no longer just a creative asset — it is an operational asset. If you want your videos to surface reliably, rank consistently, and scale without friction, you need lightweight governance built directly into your workflow.

Here’s what founders and lean teams should prioritize:

Implement Authenticity Checks for Every Video

Before publishing anything, verify that the watermark is still intact after editing or export. Early-stage teams move quickly, and small changes — compressing a file, trimming a clip, adjusting color — can unintentionally weaken the signal. Authenticity checks prevent silent failures that limit visibility.
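A minimal sketch of such a check, assuming your team has access to some watermark verification tool. No specific Google detection API is assumed: `detector` is a hypothetical placeholder for whatever tool you use, and `fake_detector` exists only to make the example runnable. The idea is to run the same detector on the original render and on the final export, and to block publishing when the signal was present before editing but missing after.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical: any callable that returns True when your verification tool
# detects the AI watermark in the file at `path`. No Google API is assumed.
WatermarkDetector = Callable[[str], bool]


@dataclass
class ExportCheck:
    source_detected: bool  # watermark found in the original generated file
    export_detected: bool  # watermark found in the edited / exported file

    @property
    def passed(self) -> bool:
        # Publishable only if the signal was present and survived the export.
        return self.source_detected and self.export_detected

    @property
    def lost_in_export(self) -> bool:
        # The "silent failure" case: editing or re-encoding removed the signal.
        return self.source_detected and not self.export_detected


def check_export(source_path: str, export_path: str, detector: WatermarkDetector) -> ExportCheck:
    """Run the same detector on the original render and on the final export."""
    return ExportCheck(detector(source_path), detector(export_path))


def fake_detector(path: str) -> bool:
    # Demonstration stand-in only: pretends untouched "_raw" files keep the watermark.
    return path.endswith("_raw.mp4")


result = check_export("launch_raw.mp4", "launch_final.mp4", fake_detector)
print(result.passed, result.lost_in_export)  # False True
```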

Enforce Metadata Discipline Across All Content

Metadata is often an afterthought for fast-moving teams, but with VEO-3 it directly affects performance. Build a simple rule:

every piece of video must have metadata that matches exactly what appears on screen.

This alone can substantially reduce misclassification and improve discoverability.
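One lightweight way to enforce that rule is to keep a short, hand-written shot list for each video and compare it with the metadata tags before publishing. The Python sketch below is an assumption about how a team might do this, not a Google tool; it uses exact matching after lowercasing, so it will not recognize synonyms.

```python
def metadata_mismatches(metadata_tags: list[str], on_screen: list[str]) -> tuple[list[str], list[str]]:
    """Compare metadata tags with a hand-written list of what is actually on screen.

    Returns (tags with no on-screen support, on-screen elements missing from metadata).
    Matching is exact after trimming and lowercasing, so synonyms are not recognized.
    """
    tags = {t.strip().lower() for t in metadata_tags}
    seen = {s.strip().lower() for s in on_screen}
    return sorted(tags - seen), sorted(seen - tags)


# Illustrative values for a single video.
tags = ["Electric bike", "city street", "sunset"]
shot_list = ["electric bike", "city street", "rider"]
unsupported, untagged = metadata_mismatches(tags, shot_list)
print(unsupported)  # ['sunset']
print(untagged)     # ['rider']
```

The first list is the more urgent one: a tag describing something that never appears on screen is exactly the kind of mismatch that leads to misclassification.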

Add Basic Schema to Make Videos Machine-Readable

Startups don’t need enterprise-grade schema systems. A minimal structured data layer — describing the topic, entities, and key moments — is enough to help Google interpret your videos more confidently. Think of schema as clarity for machines, not complexity for your team.
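As a sketch of what "minimal" can mean, the function below builds schema.org `VideoObject` JSON-LD with the video's entities (`about`) and key moments (`hasPart` with `Clip` items and `startOffset`/`endOffset` in seconds). The property names come from schema.org; the helper name, example values, and `?t=` deep-link format are illustrative assumptions, so check Google's video structured data documentation before relying on them. The output would go inside a `<script type="application/ld+json">` tag on the video's page.

```python
import json


def video_jsonld(
    name: str,
    description: str,
    page_url: str,
    content_url: str,
    thumbnail_url: str,
    upload_date: str,
    duration: str,
    entities: list[str],
    key_moments: list[tuple[str, int, int]],
) -> str:
    """Build schema.org VideoObject JSON-LD with entities and key moments (Clip parts)."""
    data = {
        "@context": "https://schema.org",
        "@type": "VideoObject",
        "name": name,
        "description": description,
        "contentUrl": content_url,
        "thumbnailUrl": thumbnail_url,
        "uploadDate": upload_date,  # ISO 8601 date or datetime
        "duration": duration,  # ISO 8601 duration, e.g. "PT45S"
        # What the video is about: one schema.org Thing per entity.
        "about": [{"@type": "Thing", "name": entity} for entity in entities],
        # Key moments: label plus start/end offsets in seconds.
        "hasPart": [
            {
                "@type": "Clip",
                "name": label,
                "startOffset": start,
                "endOffset": end,
                # Deep link to the moment; the "?t=" format is an assumption about your player.
                "url": f"{page_url}?t={start}",
            }
            for label, start, end in key_moments
        ],
    }
    return json.dumps(data, indent=2)


# Illustrative values only.
markup = video_jsonld(
    name="Launch teaser",
    description="AI-generated teaser showing an electric bike on a city street.",
    page_url="https://example.com/launch",
    content_url="https://example.com/media/launch.mp4",
    thumbnail_url="https://example.com/media/launch.jpg",
    upload_date="2025-01-15",
    duration="PT45S",
    entities=["Electric bicycle", "City"],
    key_moments=[("Bike reveal", 0, 12), ("Street ride", 12, 45)],
)
print(markup)
```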

Integrate Lightweight Compliance and Disclosure Checks

As regulations and platform rules evolve, undisclosed synthetic content can get suppressed. Create a simple checklist or automated reminder to ensure each video includes the required AI-generated content disclosures. This protects reach without slowing teams down.
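A hypothetical version of that automated reminder, sketched in Python: each queued video carries a record, and any AI-generated video without a visible disclosure label or a set platform disclosure flag is held back. The `VideoRecord` fields are assumptions; map them to whatever your CMS or upload tool actually stores.

```python
from dataclasses import dataclass


@dataclass
class VideoRecord:
    video_id: str
    ai_generated: bool
    disclosure_label: str = ""  # visible label or caption text, e.g. "Made with AI"
    platform_flag_set: bool = False  # hypothetical: the upload form's AI-content toggle


def disclosure_problems(record: VideoRecord) -> list[str]:
    """List missing disclosures for one video; an empty list means it can go out."""
    problems: list[str] = []
    if record.ai_generated:
        if not record.disclosure_label.strip():
            problems.append("missing AI disclosure label")
        if not record.platform_flag_set:
            problems.append("platform AI-content flag not set")
    return problems


queue = [
    VideoRecord("v1", True, "Made with AI", True),
    VideoRecord("v2", True),
    VideoRecord("v3", False),
]

# Hold back every video that has at least one disclosure problem.
blocked: dict[str, list[str]] = {}
for record in queue:
    problems = disclosure_problems(record)
    if problems:
        blocked[record.video_id] = problems

print(blocked)
# {'v2': ['missing AI disclosure label', 'platform AI-content flag not set']}
```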

Track Prompts, Versions, and Provenance as You Scale Output

High-velocity content operations generate dozens or hundreds of variations. Without version control, it becomes impossible to debug performance issues or verify provenance. A lightweight spreadsheet or mini-repository is often enough for early teams.
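For teams that want something slightly more durable than a spreadsheet, here is a minimal sketch of an append-only CSV provenance log. It assumes each generation has a video ID, a version label, an optional parent version, a model label, the prompt, and an output file; the SHA-256 fingerprint ties each log row to the exact file that was produced. All field names are hypothetical.

```python
import csv
import hashlib
from datetime import datetime, timezone
from pathlib import Path
from typing import Optional

# Hypothetical column set for the log.
LOG_FIELDS = ["timestamp", "video_id", "version", "parent_version", "model", "prompt", "sha256"]


def file_sha256(path: Path) -> str:
    """Fingerprint the exact output file, reading it in 1 MiB chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(1 << 20), b""):
            digest.update(chunk)
    return digest.hexdigest()


def log_generation(
    log_path: Path,
    video_id: str,
    version: str,
    parent_version: Optional[str],
    model: str,
    prompt: str,
    output_path: Path,
) -> dict[str, str]:
    """Append one generation to a CSV log, writing the header on first use."""
    write_header = not log_path.exists()
    row = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "video_id": video_id,
        "version": version,
        "parent_version": parent_version or "",  # empty for the first version
        "model": model,
        "prompt": prompt,
        "sha256": file_sha256(output_path),
    }
    with log_path.open("a", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=LOG_FIELDS)
        if write_header:
            writer.writeheader()
        writer.writerow(row)
    return row


# Example call (hypothetical file names and model label):
# log_generation(Path("provenance_log.csv"), "launch-01", "v2", "v1", "veo-3",
#                "Wide shot of an electric bike at dusk", Path("launch_v2.mp4"))
```

Because any re-encode changes a file's bytes, the hash identifies a specific export rather than the content itself, so it is worth logging a new row whenever a video is re-exported.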

Why This Matters for Startups

Startups win by moving fast — but in the VEO-3 era, speed without structure leads to visibility loss, inconsistent performance, and tangled content pipelines. The companies that scale AI video effectively are the ones that turn trust signals into a simple, repeatable process.

AI video creation is easy.

AI video reliability is the differentiator.

Watermarking is the first signal Google checks. Everything else you implement determines whether your content scales with you — or slows you down.

AI Video Trust Readiness Checklist

Before any AI-generated video is published, teams can use this checklist to determine whether the asset is fully prepared for Google’s verification, classification, and discovery systems. Each item represents a requirement in Google’s emerging trust and interpretability pipeline.

A video is distribution-ready when it meets all of the following criteria:

☐ Watermark validated, intact, and unaltered

Confirm that the VEO-3 watermark remains machine-detectable after editing, resizing, or compression. Even minor codec changes can weaken authenticity signals.

☐ Metadata accurately reflects each major scene

Descriptions, captions, and titles should match what the video visually depicts. Misalignment leads to misclassification and ranking loss.

☐ Schema markup reinforces objects, actions, and entities

Structured data should support what Google’s models detect — providing clarity around events, characters, settings, and transitions.

☐ Correct synthetic media disclosures included

Videos must contain the right labels or contextual disclosures to satisfy both platform policies and emerging regulations.

☐ Provenance signals are complete and consistent

Model version, prompt lineage, and generation pathways should align with the watermark and metadata. Inconsistencies weaken trust.

☐ No semantic mismatches between visuals and text

Ensure there are no contradictions between the video, captions, metadata, structured data, or narrative intent.

☐ Authenticity, compliance, and safety checks passed

Verify that the content meets internal safety standards, Google’s AI video policies, and any industry-specific compliance rules.

☐ Prompt, revision logs, and model version documented

Maintaining transparent version control strengthens provenance, simplifies audits, and improves long-term trust signals.

How to Interpret the Checklist

- All boxes checked → Your video is optimized for trust, visibility, and stable indexing.

- One unchecked → Review required to prevent misclassification or reduced ranking.

- Two or more unchecked → Visibility is at risk, and the video may be suppressed, miscategorized, or excluded from recommendation systems.
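The interpretation rules above are simple enough to automate. A minimal Python sketch, assuming each checklist item is recorded as checked or unchecked; items not recorded are treated as unchecked, and the status strings are illustrative.

```python
CHECKLIST = [
    "Watermark validated, intact, and unaltered",
    "Metadata accurately reflects each major scene",
    "Schema markup reinforces objects, actions, and entities",
    "Correct synthetic media disclosures included",
    "Provenance signals are complete and consistent",
    "No semantic mismatches between visuals and text",
    "Authenticity, compliance, and safety checks passed",
    "Prompt, revision logs, and model version documented",
]


def readiness(checked: dict[str, bool]) -> tuple[str, list[str]]:
    """Apply the rules: 0 unchecked = ready, 1 = review required, 2+ = visibility at risk.

    Items missing from `checked` count as unchecked.
    """
    unchecked = [item for item in CHECKLIST if not checked.get(item, False)]
    if not unchecked:
        status = "ready"
    elif len(unchecked) == 1:
        status = "review required"
    else:
        status = "visibility at risk"
    return status, unchecked


answers = {item: True for item in CHECKLIST}
answers["Correct synthetic media disclosures included"] = False
print(readiness(answers))
# ('review required', ['Correct synthetic media disclosures included'])
```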

This checklist turns AI video production into a predictable workflow — not an unpredictable gamble.

Why Google VEO-3’s Watermarking Update Signals a New Era for AI Video

VEO-3 watermarking is not just a technical improvement — it marks a fundamental shift in how AI-generated video will be validated, classified, and surfaced across Google’s ecosystem. For the first time, Google is signaling that verification is a prerequisite for distribution, not an optional enhancement.

Three accelerating forces explain why this shift is happening now:

AI video realism is outpacing audience trust

AI-generated video has reached a level of visual accuracy—lighting, motion physics, facial dynamics, environmental coherence—where viewers can no longer reliably distinguish synthetic footage from real footage. Without built-in verification signals, platforms risk misinformation, misrepresentation, and erosion of public trust.

Watermarking allows Google to maintain confidence in the authenticity of what it surfaces, even as realism continues to advance.

Global regulations are moving toward mandatory provenance

From upcoming EU AI rules to U.S. discussions around synthetic media labeling, governments are preparing enforceable standards for provenance, traceability, and AI disclosures. Google is not waiting for regulation to be finalized; it is building the compliance foundation now.

Watermarking is Google’s early alignment with a world where verifiable AI content is not optional — it is required.

Machine-readable signals are becoming the backbone of modern search

Search is shifting from keyword-driven indexing to model-based interpretation. Google’s systems rely on signals they can validate, cross-check, and interpret semantically. Watermarking gives Google an anchor signal to begin that interpretation process.

In this new landscape, a video’s visibility depends less on production quality and more on how reliably Google can understand and trust the asset.

A Structural Shift, Not a Small Feature Update

These forces together make watermarking far more than a disclosure tool. It represents a structural change in how AI video must be created, governed, and prepared for distribution. Going forward, unverified or poorly signaled AI video will struggle to rank, surface, and maintain visibility — regardless of its aesthetic quality.

This is the moment where teams must move from creating AI video to governing AI video. Google’s watermarking update is the first clear indicator of that transition.

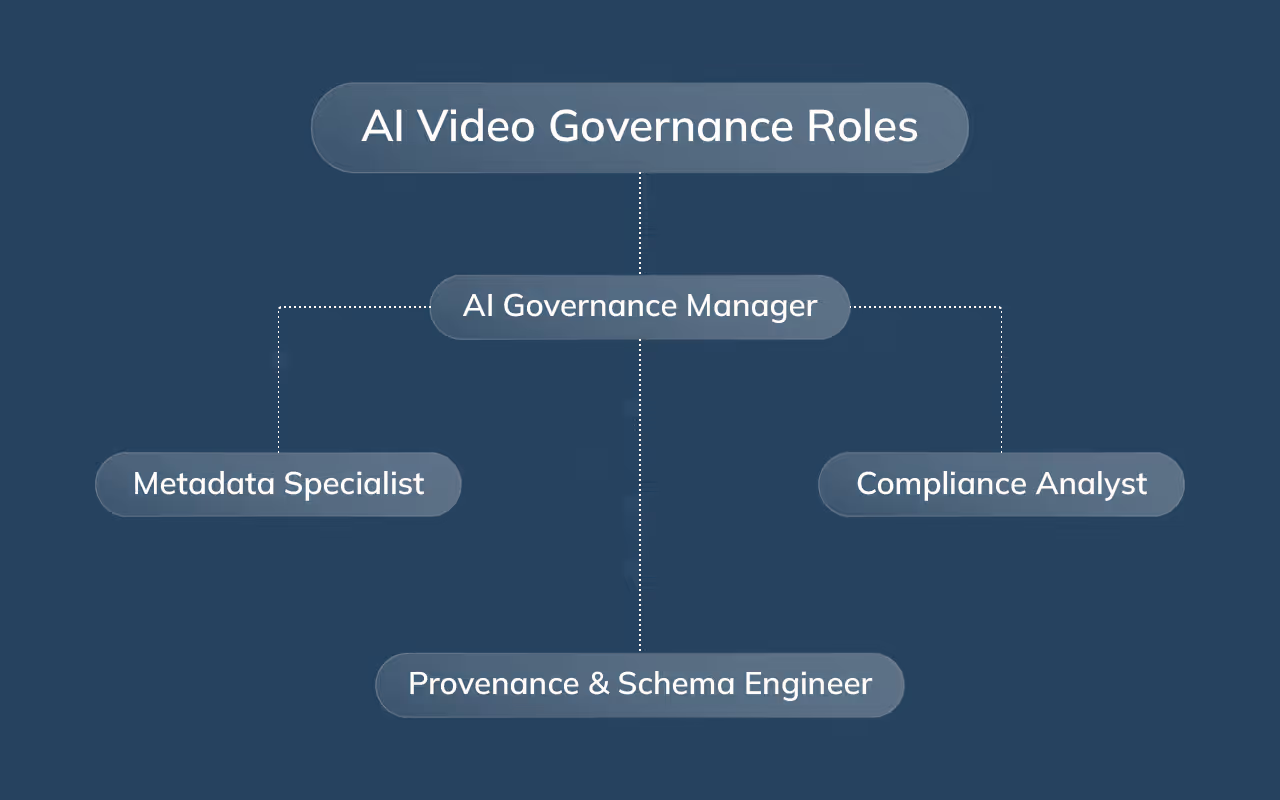

The Rise of AI Video Governance Roles

As Google formalizes how it verifies and evaluates synthetic video, a new operational reality is emerging for businesses: creating AI video is no longer enough. Teams must now prove that their content is authentic, traceable, compliant, and machine-readable. This requires skill sets that traditional creative, marketing, or engineering teams were never designed to handle.

AI video governance sits at the intersection of three disciplines:

- Engineering, where model behavior, metadata, and provenance must be understood

- Creative, where narrative and visual meaning must align with technical signals

- Compliance, where safety, disclosure, and regulatory standards must be met

No single legacy role covers all of this. That’s why entirely new functions are now appearing inside AI-forward organizations.

What These Specialists Actually Do

To ensure that AI-generated videos pass Google’s verification and ranking pipeline, companies now need experts who can manage:

- Provenance workflows – tracking the origin, model version, and generation pathway

- Prompt lineage documentation – mapping changes between versions to maintain consistency

- Metadata and schema design – ensuring Google can interpret entities, actions, and scenes

- Trust and safety compliance – aligning videos with synthetic media disclosure policies

- AI model versioning – understanding how model changes impact outputs and indexing

- Semantic auditing – checking whether visuals, captions, and structured data tell the same story

These responsibilities determine whether a video is eligible for full visibility—not just whether it looks polished.

The New Roles Being Created

As a result, businesses are formalizing this work into specialized positions, including:

- VEO-3 Metadata Specialist – oversees metadata accuracy and machine-readability

- AI Content Governance Manager – ensures alignment with policy, disclosure, and trust standards

- Synthetic Media Compliance Analyst – monitors and enforces emerging global regulations

- AI Provenance & Schema Engineer – builds the technical infrastructure behind traceability

These roles didn’t exist two years ago, yet they are becoming foundational to any brand producing AI video at meaningful scale.

Why These Roles Matter Now

Without governance specialists:

- videos may be misclassified

- trust signals may break

- schema may contradict visual content

- disclosures may be incomplete

- indexing may fail

- visibility may drop unpredictably

Governance is what bridges the gap between creating AI video and ensuring Google can trust it enough to surface it widely.

In short, these new roles ensure that AI video isn’t just compelling to humans — it is fully interpretable, verifiable, and indexable by machines.

Read more: Dive deeper into the emerging Google VEO-3 Specialist role.

Build a Team That Keeps Your AI Video Visible, Compliant, and Scalable

Scaling AI video responsibly requires more than good prompts. It requires specialists who understand watermarking integrity, metadata accuracy, schema design, provenance tracking, and synthetic media compliance.

Hire Overseas helps founders build the global talent infrastructure behind these new workflows — the VEO-3 specialists, metadata engineers, AI governance analysts, and provenance managers who ensure your content is fully trusted by Google’s systems.

If you want to stay ahead of the visibility curve and build AI video operations that scale without bottlenecks…

Book a personalized consultation with us today.

FAQs About Google VEO-3 Watermarking

Is Google VEO-3 watermarking mandatory for all AI-generated videos?

While Google has not framed watermarking as a legal requirement, it is effectively mandatory for discoverability. Videos without valid watermarking are treated as unverified synthetic content, which can limit ranking, indexing, and recommendation eligibility across Google surfaces.

Can traditional editing software accidentally remove or weaken a VEO-3 watermark?

Yes. Common workflows such as re-encoding, compressing, trimming, stabilizing, or applying aggressive color grading can degrade the embedded watermark signal. This is why post-export authenticity checks are essential before publishing.

How does Google detect tampering attempts with a VEO-3 watermark?

Google cross-references the watermark with multiple parallel signals—provenance metadata, model lineage, structured data, and semantic interpretation. If any component conflicts with the watermark, the system flags the asset as inconsistent or unverified, reducing visibility.

What happens if a brand publishes both watermarked and non-watermarked versions of the same video?

Google may treat them as separate assets with different trust levels. The non-watermarked version risks lower ranking and may confuse indexing systems, creating fragmented search performance across duplicates.

Does watermarking influence how Google attributes authorship or copyright?

The watermark authenticates AI generation pathways but does not replace copyright declarations. Brands still need traditional copyright metadata to establish ownership and distribution rights.

How does watermarking interact with misinformation detection?

Verified watermark signals help Google distinguish legitimate AI content from deceptive or unlabeled synthetic media. Videos lacking these signals may be routed through additional safety filters that restrict distribution.

Will future regulations require brands to prove watermark compliance?

Likely. Global AI legislation is trending toward enforceable provenance requirements. Google’s watermarking system is positioned to become the technical foundation that brands use to demonstrate regulatory adherence in audits or disclosures.

Unlock Global Talent with Ease

Hire Overseas streamlines your hiring process from start to finish, connecting you with top global talent.
